Utilizing Graph Neural Networks to Improve Dialogue-based Relation Extraction

Abstract

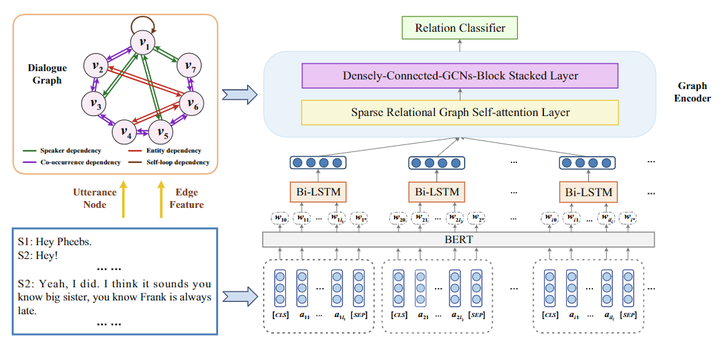

Relation extraction is an active research area in Natural Language Processing (NLP). Prior work has focused primarily on corpora of formal text, which is inherently non-dialogic. Recently, dialogue-based relation extraction, which detects relations among speaker-aware entities scattered across a dialogue, has attracted growing attention. Several sequence-based neural methods have been proposed to capture the relevant information. However, identifying cross-sentence relations remains an open problem, especially in domain-specific dialogue systems. In this paper, we propose a Relational Attention Enhanced Graph Convolutional Network (RAEGCN), which models the whole dialogue as a semantic interaction graph by emphasizing speaker-related information and leveraging various inter-sentence dependencies. A dense connectivity mechanism is also introduced to support multi-hop relational reasoning across sentences, capturing local and non-local features simultaneously. Experiments on the real-world DialogRE dataset show that our model significantly outperforms previous approaches and is more robust.
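To make the dense connectivity idea concrete, the following is a minimal NumPy sketch of graph convolution layers in which each layer receives the concatenation of the input features and all earlier layer outputs. It is an illustration under stated assumptions, not the RAEGCN implementation: the relational attention component is omitted, the toy chain graph, feature sizes, random weights, and the names `normalize_adjacency` and `dense_gcn` are all hypothetical, and the normalization is the standard symmetric GCN form rather than anything specified in this abstract.

```python
import numpy as np


def normalize_adjacency(adj):
    """Symmetrically normalize A + I, as in a standard GCN layer (assumed here)."""
    a_hat = adj + np.eye(adj.shape[0])       # add self-loops
    deg = a_hat.sum(axis=1)                  # node degrees, always >= 1
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def dense_gcn(adj, features, weights):
    """Stack GCN layers with dense connections.

    Each layer's input is the concatenation of the original features and
    every earlier layer's output, so later layers can mix local (few-hop)
    and non-local (many-hop) information.
    """
    a_norm = normalize_adjacency(adj)
    outputs = [features]
    for w in weights:
        layer_in = np.concatenate(outputs, axis=1)
        h = np.maximum(a_norm @ layer_in @ w, 0.0)   # ReLU activation
        outputs.append(h)
    # Final node representation: all layer outputs concatenated.
    return np.concatenate(outputs[1:], axis=1)


# Hypothetical toy graph: 4 nodes (e.g. utterances or entities) in a chain.
adj = np.array(
    [[0, 1, 0, 0],
     [1, 0, 1, 0],
     [0, 1, 0, 1],
     [0, 0, 1, 0]],
    dtype=float,
)
rng = np.random.default_rng(0)
in_dim, hidden, num_layers = 8, 4, 3
features = rng.normal(size=(4, in_dim))
# Layer i sees in_dim + i * hidden input columns because of the dense links.
weights = [rng.normal(size=(in_dim + i * hidden, hidden)) for i in range(num_layers)]

node_repr = dense_gcn(adj, features, weights)
print(node_repr.shape)  # (4, 12)
```

With three layers on a chain graph, the last layer can already combine information from nodes three hops apart, while the concatenated output still keeps the one-hop features from the first layer.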