Learning Label-Relational Output Structure for Adaptive Sequence Labeling

Abstract

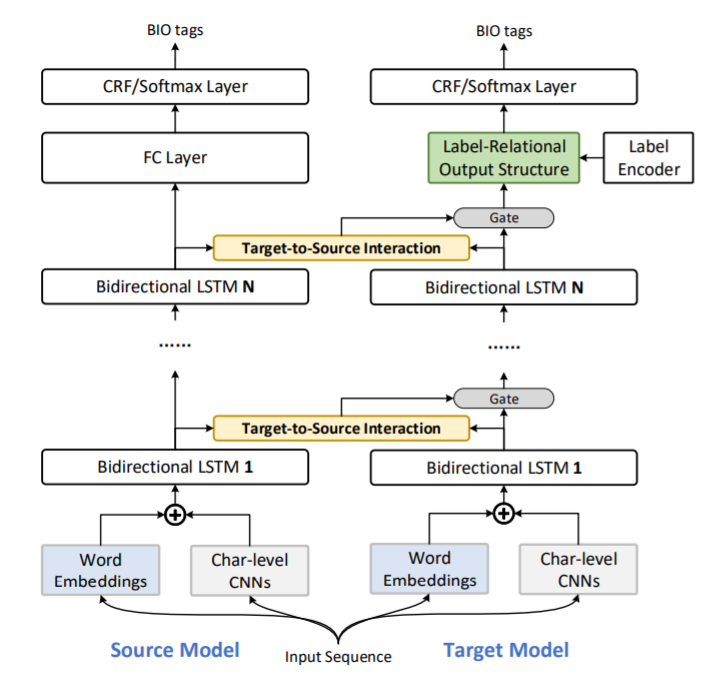

Sequence labeling is a fundamental task in natural language understanding. Recent neural sequence labeling models have achieved significant success when sufficient training data are available. In practical scenarios, however, the entity types to be annotated keep evolving, even within the same domain. To transfer knowledge from a source model pre-trained on previously annotated data, we propose an approach that learns a label-relational output structure to explicitly capture label correlations in the latent space. In addition, we construct a target-to-source interaction between the source model $M_S$ and the target model $M_T$, and apply a gate mechanism to control how much information from $M_S$ and $M_T$ is passed down. Experiments show that our method consistently outperforms state-of-the-art methods by a statistically significant margin, and that it is especially effective at recognizing rare new entities in the target data.
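The abstract does not specify the exact form of the gate that combines information from the source model M_S and the target model M_T. The following is a minimal sketch that assumes a standard element-wise sigmoid gate, a common choice in GRU- and highway-style networks that may differ from the one the authors use. The gate computes g = σ(W[h_S; h_T] + b) and outputs g ⊙ h_S + (1 − g) ⊙ h_T, so each dimension decides how much of the source representation to keep. The function name `gated_fusion` and the parameters `w` and `b` are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def gated_fusion(h_s: Vector, h_t: Vector, w: Matrix, b: Vector) -> Vector:
    """Mix a source hidden state h_s and a target hidden state h_t.

    Hypothetical sketch of a generic sigmoid gate (not the paper's exact form):
        g      = sigmoid(W [h_s; h_t] + b)    # one gate value per dimension
        output = g * h_s + (1 - g) * h_t      # element-wise interpolation
    w has shape d x 2d and b has length d, where d = len(h_s).
    """
    d = len(h_s)
    if len(h_t) != d or len(w) != d or len(b) != d:
        raise ValueError("dimension mismatch between h_s, h_t, w and b")
    concat = h_s + h_t  # list concatenation gives [h_s; h_t] of length 2d
    out: Vector = []
    for i in range(d):
        if len(w[i]) != 2 * d:
            raise ValueError("each row of w must have length 2 * d")
        z = sum(wij * xj for wij, xj in zip(w[i], concat)) + b[i]
        g = sigmoid(z)
        out.append(g * h_s[i] + (1.0 - g) * h_t[i])
    return out


if __name__ == "__main__":
    zeros = [[0.0] * 4 for _ in range(2)]
    # Zero weights and bias give g = 0.5, i.e. the average of the two states.
    print(gated_fusion([1.0, 2.0], [3.0, 4.0], zeros, [0.0, 0.0]))  # [2.0, 3.0]
```

With all-zero parameters the gate is 0.5 in every dimension, so the output is the average of the two states. A large positive bias pushes the gate toward 1 and lets the source state pass through almost unchanged, while a large negative bias favors the target state.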