Improving Abstractive Dialogue Summarization with Graph Structures and Topic Words

Abstract

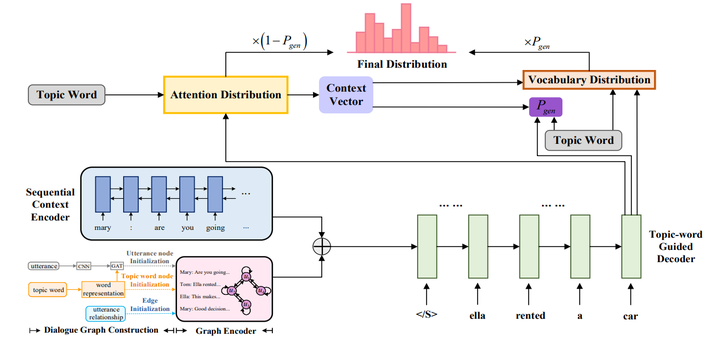

Abstractive dialogue summarization has recently attracted growing attention. Because information is exchanged between at least two interlocutors and the key elements of an event are often spread across multiple utterances, it is necessary to explore the inherent relations and structures of dialogue content. However, existing approaches often process the dialogue with sequence-based models, which struggle to capture long-distance inter-sentence relations. In this paper, we propose a Topic-word Guided Dialogue Graph Attention (TGDGA) network that models the dialogue as an interaction graph according to topic word information. A masked graph self-attention mechanism integrates cross-sentence information flows and focuses on related utterances, which leads to a better understanding of the dialogue. Moreover, topic word features are introduced to assist the decoding process. We evaluate our model on the SAMSum Corpus and the Automobile Master Corpus. The experimental results show that our method outperforms most of the baselines.
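To make the idea of masked graph self-attention concrete, the following is a minimal NumPy sketch, not the TGDGA implementation. It rests on two assumptions that the abstract does not state: utterances are linked when they share at least one topic word, and attention is plain scaled dot-product attention over utterance vectors. The function names `topic_word_adjacency` and `masked_self_attention` are hypothetical.

```python
import numpy as np


def topic_word_adjacency(utterances, topic_words):
    """Build a boolean utterance graph.

    Assumed rule (not from the paper): two utterances are connected
    when they share at least one topic word.
    """
    topic_sets = [set(u.lower().split()) & topic_words for u in utterances]
    n = len(utterances)
    # Self-loops guarantee that every row has at least one allowed entry.
    adj = np.eye(n, dtype=bool)
    for i in range(n):
        for j in range(i + 1, n):
            if topic_sets[i] & topic_sets[j]:
                adj[i, j] = adj[j, i] = True
    return adj


def masked_self_attention(h, adj):
    """Scaled dot-product self-attention restricted to graph neighbours.

    h:   (n, d) array of utterance representations
    adj: (n, n) boolean adjacency matrix with self-loops
    """
    d = h.shape[-1]
    scores = h @ h.T / np.sqrt(d)
    # Non-neighbours get -inf so they receive zero weight after softmax.
    scores = np.where(adj, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ h, weights


if __name__ == "__main__":
    utterances = [
        "I bought a new car",
        "the weather is nice",
        "the car engine is loud",
    ]
    adj = topic_word_adjacency(utterances, {"car", "engine"})
    h = np.random.default_rng(0).normal(size=(len(utterances), 4))
    updated, weights = masked_self_attention(h, adj)
    print(adj)
    print(weights.round(3))
```

In this toy example the second utterance shares no topic word with the others, so it attends only to itself, while the first and third utterances exchange information despite not being adjacent in the sequence.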