Adversarial Self-Supervised Learning for Out-of-Domain Detection

Abstract

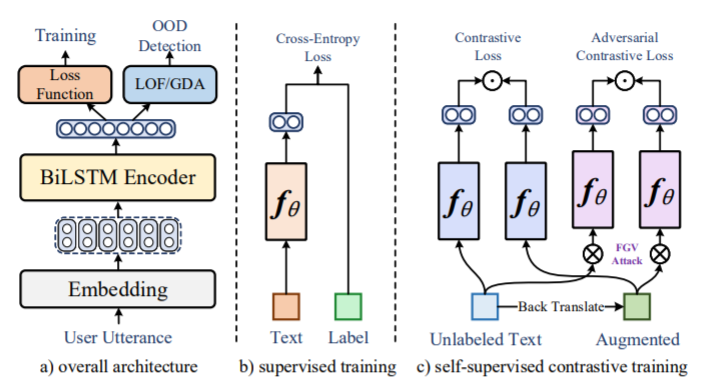

Detecting out-of-domain (OOD) intents is crucial for deployed task-oriented dialogue systems. Previous unsupervised OOD detection methods only extract discriminative features of different in-domain intents, while their supervised counterparts can directly distinguish OOD from in-domain intents but require extensive labeled OOD data. To combine the benefits of both, we propose a self-supervised contrastive learning framework that models discriminative semantic features of both in-domain and OOD intents from unlabeled data. In addition, we introduce an adversarial augmentation neural module to improve the efficiency and robustness of contrastive learning. Experiments on two public benchmark datasets show that our method consistently outperforms the baselines by a statistically significant margin.