ADPL: Adversarial Prompt-based Domain Adaptation for Dialogue Summarization with Knowledge Disentanglement

Abstract

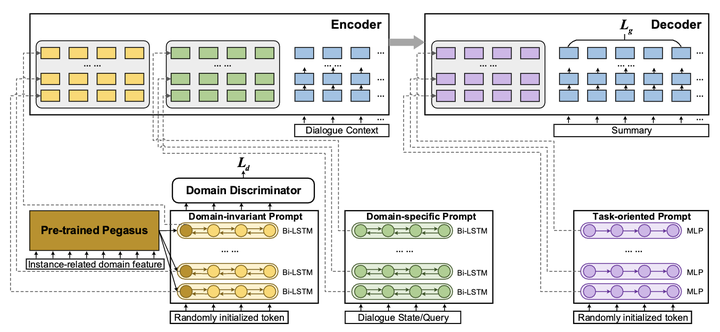

Traditional dialogue summarization models rely on large-scale, manually labeled corpora and generalize poorly to new domains, so domain adaptation from a labeled source domain to an unlabeled target domain is important in practical summarization scenarios. However, existing domain adaptation work in dialogue summarization generally requires large-scale pre-training on extensive external data. To explore lightweight fine-tuning methods, in this paper we propose an efficient Adversarial Disentangled Prompt Learning (ADPL) model for domain adaptation in dialogue summarization. We introduce three kinds of prompts: a domain-invariant prompt (DIP), a domain-specific prompt (DSP), and a task-oriented prompt (TOP). DIP disentangles the knowledge shared by the source and target domains and transfers it in an adversarial way, which improves the accuracy of predictions about domain-invariant information and strengthens generalization to new domains. DSP guides the model to focus on domain-specific knowledge using domain-related features. TOP captures task-oriented knowledge to generate high-quality summaries. Instead of fine-tuning the whole pre-trained language model (PLM), we update only the prompt networks and keep the PLM fixed. We conduct zero-shot experiments and build domain adaptation benchmarks on two multi-domain dialogue summarization datasets, TODSum and QMSum. Extensive experiments and analysis show that our method significantly outperforms full-parameter fine-tuning while using fewer trainable parameters.
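To make the two central ideas concrete (a frozen backbone with trainable prompts, and adversarial learning of domain-invariant features), the following is a minimal scalar sketch and not the ADPL implementation. It assumes gradient reversal as the adversarial mechanism, which the abstract does not specify, and every name in it (`adversarial_step`, `domain_loss`, the `backbone`, `prompt`, and `disc` parameters) is hypothetical. A single frozen weight stands in for the PLM, a scalar offset stands in for the domain-invariant prompt, and a one-weight logistic classifier stands in for the domain discriminator.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def domain_loss(params, x, domain):
    """Binary cross-entropy of the toy domain classifier (0 = source, 1 = target)."""
    feat = params["backbone"] * (x + params["prompt"])
    prob = sigmoid(params["disc"] * feat)
    return -(domain * math.log(prob) + (1 - domain) * math.log(1.0 - prob))


def adversarial_step(params, x, domain, lr=0.1, lam=1.0):
    """One update with gradient reversal (an assumed mechanism, not from the paper).

    The discriminator descends its loss; the prompt receives the negated
    gradient, so it moves to make the domain harder to predict.
    The backbone weight is never updated.
    """
    w_b, p, v = params["backbone"], params["prompt"], params["disc"]
    feat = w_b * (x + p)          # frozen backbone applied to the prompt-shifted input
    prob = sigmoid(v * feat)      # discriminator's estimate of P(domain = target)
    dlogit = prob - domain        # d(BCE)/d(logit)
    grad_disc = dlogit * feat     # d(BCE)/d(disc)
    grad_prompt = dlogit * v * w_b  # d(BCE)/d(prompt)
    return {
        "backbone": w_b,                       # kept fixed
        "prompt": p + lr * lam * grad_prompt,  # reversed sign: ascend the discriminator loss
        "disc": v - lr * grad_disc,            # normal sign: descend the discriminator loss
    }


params = {"backbone": 2.0, "prompt": 0.0, "disc": 0.5}
updated = adversarial_step(params, x=1.0, domain=1)
```

In this toy setting, `updated["backbone"]` equals the original value, while the prompt shifts in the direction that raises the discriminator's loss. A real system would replace the scalars with prompt networks and a PLM, and would train the summarization objective alongside the adversarial one.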