Modeling Discriminative Representations for Out-of-Domain Detection with Supervised Contrastive Learning

Abstract

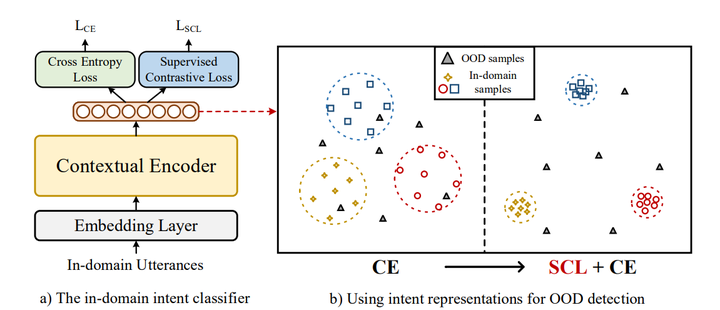

Detecting Out-of-Domain (OOD) or unknown intents from user queries is essential in a task-oriented dialog system. A key challenge of OOD detection is to learn discriminative semantic features. Traditional cross-entropy loss only focuses on whether a sample is correctly classified, and does not explicitly distinguish the margins between categories. In this paper, we propose a supervised contrastive learning objective to minimize intra-class variance by pulling together in-domain intents belonging to the same class and maximize inter-class variance by pushing apart samples from different classes. Besides, we employ an adversarial augmentation mechanism to obtain pseudo diverse views of a sample in the latent space. Experiments on two public datasets demonstrate the effectiveness of our method in capturing discriminative representations for OOD detection.
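To make the pull-together and push-apart idea concrete, the following is a minimal sketch of a generic supervised contrastive loss in plain Python. It is an illustration under stated assumptions, not the exact objective of this paper: the function names, the temperature value of 0.1, the L2 normalization, and the averaging over anchors are all assumptions, and the adversarial augmentation step is not modeled here.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def supervised_contrastive_loss(features, labels, temperature=0.1):
    """Generic supervised contrastive loss over a batch (hypothetical helper).

    For each anchor, samples sharing its label are positives; every other
    sample in the batch appears in the softmax denominator. Anchors without
    any positive are skipped. The temperature default is an assumption.
    """
    z = [l2_normalize(f) for f in features]
    n = len(z)
    total, num_anchors = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue
        # Temperature-scaled similarity of the anchor to every other sample.
        sims = {
            j: sum(a * b for a, b in zip(z[i], z[j])) / temperature
            for j in range(n)
            if j != i
        }
        # Numerically stable log-sum-exp for the denominator.
        max_sim = max(sims.values())
        log_denom = max_sim + math.log(
            sum(math.exp(s - max_sim) for s in sims.values())
        )
        # Average negative log-probability assigned to the positives.
        total += -sum(sims[j] - log_denom for j in positives) / len(positives)
        num_anchors += 1
    return total / num_anchors if num_anchors else 0.0


# Toy 2-D "intent" features forming two tight clusters.
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
loss_aligned = supervised_contrastive_loss(features, [0, 0, 1, 1])
loss_mixed = supervised_contrastive_loss(features, [0, 1, 0, 1])
```

In this toy batch, labels that agree with the geometric clusters yield a much smaller loss than labels that cut across them, which is the behavior the objective rewards: small intra-class variance and large inter-class variance.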