Give the Truth: Incorporate Semantic Slot into Abstractive Dialogue Summarization

Abstract

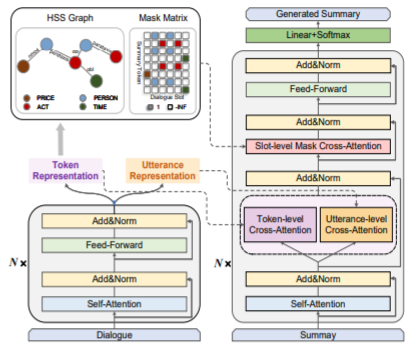

Abstractive dialogue summarization suffers from many factual errors, which arise because salient elements are scattered across the multi-speaker information interaction process. In this work, we design a heterogeneous semantic slot graph with a slot-level mask cross-attention to enhance slot features for more factually correct summarization. We also propose a slot-driven beam search algorithm for the decoding process that prioritizes generating salient elements within a limited length by “filling in the blanks”. In addition, we introduce adversarial contrastive learning into the training process to improve the generalization of our model. Experimental results on different types of factual errors show the effectiveness of our methods, and human evaluation further verifies the results.