A Finer-grain Universal Dialogue Semantic Structures based Model For Abstractive Dialogue Summarization

Abstract

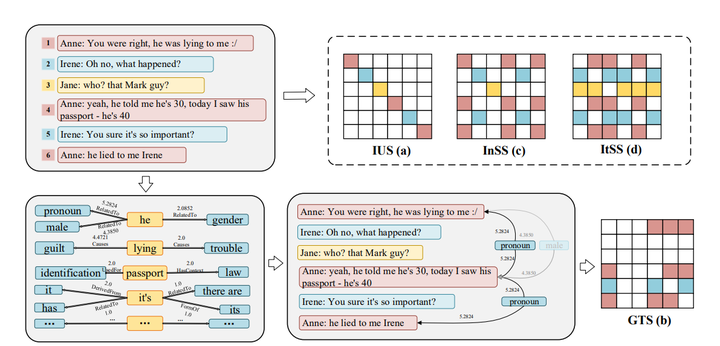

Although abstractive summarization models have achieved impressive results on document summarization tasks, their performance on dialogue modeling is much less satisfactory because they encode dialogue with crude, straightforward methods. To address this problem, we propose FinDS, a novel end-to-end Transformer-based model for abstractive dialogue summarization that leverages Finer-grain universal Dialogue semantic Structures to model dialogue and generates better summaries. Experiments on the SAMSum dataset show that FinDS outperforms various dialogue summarization approaches and achieves new state-of-the-art (SOTA) ROUGE results. Finally, we apply FinDS to a more complex scenario, showing the robustness of our model. We also release our source code.